The computing industry has seen unprecedented growth, and this trend is nowhere near stagnation or decline. The introduction of electronics to computing, new programming paradigms, the advent of the World Wide Web, Artificial Intelligence, IoT: the computing world has an unending list of 'heroes', but many computing innovations never got their share of fame and acknowledgment.

These overlooked inventions are as important as the popular ones and some of them form the base of your preferred technology product or service.

Here, we have compiled and explored 11 such inventions you’ll love to read about.

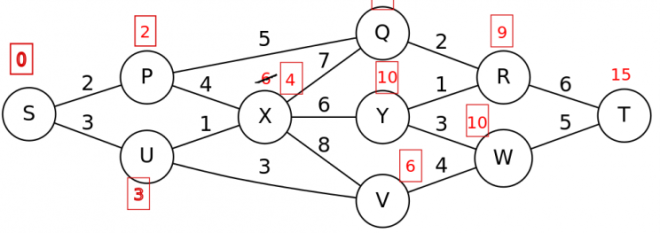

Dijkstra's Algorithm

Dijkstra's algorithm is one of the most popular algorithms in computer science. A classic example of a greedy algorithm, it finds the shortest path from a starting node to all other nodes in a weighted graph whose edge weights are non-negative. This vital logic was first conceived by Edsger W. Dijkstra in 1956.
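To make the idea concrete, here is a minimal Python sketch of the algorithm using a priority queue. The function name `dijkstra`, the `roads` graph and its weights are made up for illustration; real mapping and routing software uses far more elaborate variants.

```python
import heapq

def dijkstra(graph, source):
    """Return the shortest distance from source to every reachable node.

    graph maps each node to a dict of {neighbor: non-negative edge weight}.
    """
    dist = {source: 0}
    heap = [(0, source)]  # entries are (distance so far, node)
    while heap:
        d, node = heapq.heappop(heap)
        if d > dist.get(node, float("inf")):
            continue  # stale entry: a shorter path to this node was already found
        for neighbor, weight in graph.get(node, {}).items():
            candidate = d + weight
            if candidate < dist.get(neighbor, float("inf")):
                dist[neighbor] = candidate
                heapq.heappush(heap, (candidate, neighbor))
    return dist

# Hypothetical road map: node names and weights are illustrative only.
roads = {
    "A": {"B": 4, "C": 1},
    "B": {"D": 1},
    "C": {"B": 2, "D": 5},
    "D": {},
}
print(dijkstra(roads, "A"))
```

The greedy step is the `heappop`: the closest unsettled node is always processed next, which is only safe because no edge weight is negative.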

While most of us overlook the complexity of this algorithm, it exists in many variants today for a range of applications. A common use is in online maps, which give us the shortest route between any two locations.

In addition, this underrated computing invention is important in IP routing, where protocols such as Open Shortest Path First (OSPF) rely on it. The Internet we use today would not be the same without this logic.

The algorithm is equally useful in telephone networks, where it helps route a voice call between two phones.

RC 4000 Multiprogramming System

This multiprogramming system was originally developed for the RC 4000 minicomputer by Per Brinch Hansen in 1969. While the RC 4000 itself was not that successful, the underlying system became extremely influential for introducing the microkernel concept: an attempt to turn the operating system into a group of interacting programs that communicate through a small kernel.

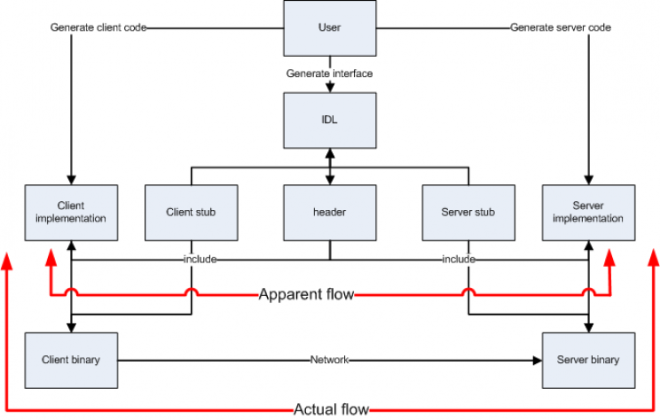

This vital computing innovation also paved the way for Remote Procedure Call (RPC), which developers use today to build distributed client/server programs.

As modern operating systems grew more complex and feature-rich over the years, RPC spared developers from rewriting code for common client/server concerns such as security, synchronization and data flow handling.

It minimizes redevelopment effort and saves a huge amount of time and money for developers building their own systems or applications.

Transmission Control Protocol (TCP)

We are all reaping the benefits of a fast, reliable and well-connected worldwide network called the Internet. But too few of us know what really powers it.

In the early days of computer networking, there were separate, isolated networks around the world. The Transmission Control Protocol, along with the Internet Protocol, allowed these networks to interconnect and gave rise to the Internet.

TCP was first introduced to the world in 1974 by Vint Cerf and Bob Kahn. It specifies a common syntax, semantics and synchronization for the exchange of information between computers. It runs over packet-switched networks, where data travels as individual packets.
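The packet idea can be illustrated with a toy Python sketch: a message is cut into numbered segments, the segments arrive in a scrambled order, and the receiver uses the sequence numbers to restore the original. This is only an illustration of the principle, not the real TCP wire format; the helper names `segment` and `reassemble` are hypothetical.

```python
import random

def segment(message: bytes, size: int):
    """Split a message into (sequence_number, payload) segments."""
    return [(offset, message[offset:offset + size])
            for offset in range(0, len(message), size)]

def reassemble(segments):
    """Rebuild the message by ordering segments by sequence number."""
    return b"".join(payload for _, payload in sorted(segments))

message = b"Hello from one network to another"
packets = segment(message, size=8)
random.Random(0).shuffle(packets)  # simulate out-of-order arrival
print(reassemble(packets) == message)
```

Real TCP adds acknowledgments, retransmission of lost segments and flow control on top of this basic numbering.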

TCP/IP made the World Wide Web, e-mail, FTP and many other technologies possible.

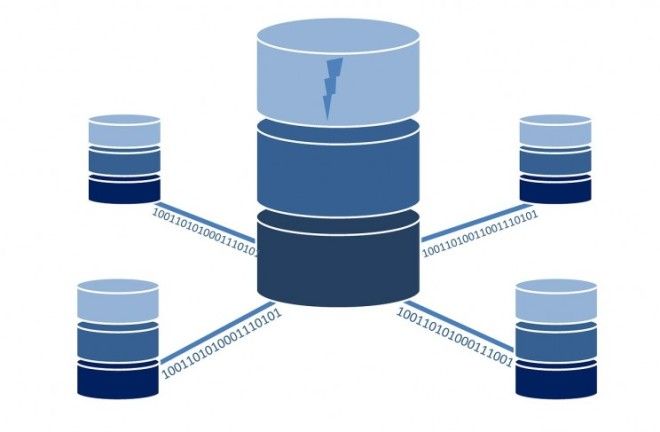

Database Management System (DBMS)

Computing has always been concerned with data. The basic function of a computer is to read data, process it, store it and display it. So, how do we ensure flawless and reliable storage and retrieval of that data?

Before the 1960s, operating systems handled this task with their file systems, but file systems suffered from redundancy, inconsistency and a lack of concurrency. The DBMS was invented to counter these issues.

A DBMS is software that helps us store, organize, retrieve and modify data. In 1970, Edgar Codd proposed the relational model, which became the basis of the relational DBMS. Relational systems proved more flexible than earlier designs, and major sectors such as banking and the military soon started using them.
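The four basic operations (store, organize, retrieve, modify) can be sketched with SQLite, a small relational DBMS that ships with Python. The `accounts` table and its rows are invented for this example.

```python
import sqlite3

# An in-memory database, so nothing is written to disk.
conn = sqlite3.connect(":memory:")

# Organize: declare the structure of the data once.
conn.execute(
    "CREATE TABLE accounts (id INTEGER PRIMARY KEY, owner TEXT, balance INTEGER)"
)

# Store: insert rows (sample data, purely illustrative).
conn.executemany(
    "INSERT INTO accounts (owner, balance) VALUES (?, ?)",
    [("Ada", 120), ("Linus", 80)],
)

# Modify: update a row in place.
conn.execute("UPDATE accounts SET balance = balance + 30 WHERE owner = ?", ("Linus",))

# Retrieve: ask a question declaratively instead of scanning files by hand.
rows = conn.execute(
    "SELECT owner, balance FROM accounts ORDER BY balance DESC"
).fetchall()
print(rows)
conn.close()
```

The application never says how the rows are laid out on disk; it only states what it wants, which is the key convenience the relational approach brought.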

The DBMS revolutionized the world of data handling. It is now an integral part of web applications, banking, reservation systems and much more. But how often do we realize its importance today?

World Wide Web

The Internet had been around for a long time, but it was used almost exclusively by academic, government and scientific communities. In 1990, Tim Berners-Lee brought to life one of the most influential projects of our time, the World Wide Web.

The WWW is an 'information mesh' on the Internet that identifies web resources with Uniform Resource Identifiers (URIs) and lets users navigate between them with hyperlinks. Tim Berners-Lee developed the world's first web browser, HTTP and HTML shortly afterward.
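As a small illustration of how a URI identifies a resource and how a hyperlink is resolved against the page that contains it, here is a sketch using Python's standard `urllib.parse` module. The `example.org` address and paths are placeholders.

```python
from urllib.parse import urlparse, urljoin

# A placeholder address, split into the parts a browser works with.
page = "https://example.org/docs/page.html?lang=en#intro"
parts = urlparse(page)
print(parts.scheme, parts.netloc, parts.path, parts.query, parts.fragment)

# A relative hyperlink found on that page is resolved against the page's URI.
target = urljoin(page, "../about.html")
print(target)
```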

The WWW made the Internet accessible to all. Presently, there are more than 3.7 billion users worldwide and about 1.3 billion websites. The WWW is responsible for the information boom we have witnessed.

But this crucial invention is mostly overlooked, not just by regular web surfers but even by web browsers.

Computer Mouse

The mouse is an integral part of the Graphical User Interface (GUI). It has made computers easy to navigate and use. Many pointing devices were conceived and prototyped after WWII for military and scientific use, but it was in 1964 that the first modern mouse was conceived by Douglas Engelbart at Stanford Research Institute.

He publicly demonstrated it in 1968. Bill English, a colleague of Engelbart, went a step further and invented the 'ball mouse' for the Xerox Alto in 1972. Microsoft adopted the mouse in 1982 and made its software mouse-compatible.

But the greatest rise in the mouse's adoption and popularity was fueled by Apple's Macintosh 128K and the Atari ST, which made using a mouse easier and more rewarding. The optical mouse was invented in the 1980s, but it only became economically viable after 1995.

Recently, special purpose mice have become the trend with the advent of the gaming mouse, 3D mouse, etc.

Computer Storage

Storage is one of the most fundamental components of a computer, along with input/output and the ALU (arithmetic logic unit). It has been at the core of computer development and has often been a constraint on computer performance.

Computer storage has evolved dramatically over time. Data storage started with punch cards, and magnetic storage soon followed. In those days, only kilobytes of memory were available for use.

Semiconductor memory soon became the norm, and storage capacities soared. Flash drives and optical disks were introduced to make storage convenient.

We now enjoy gigabytes and terabytes of storage at little cost, which is why this computing invention is so easily taken for granted. Cloud storage has given data storage an all-new dimension.

Pixels

Unlike the other computing inventions listed here, the pixel was first used in television, in the late 1920s. Pixels, or 'picture elements', are the smallest units of a digital image. A digital image is made up of a large number of pixels, each of which can be controlled to display a certain color and intensity.
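In code, such an image is often modeled as a grid of color values. The sketch below assumes each pixel is a `(red, green, blue)` tuple with channels from 0 to 255, and converts a tiny made-up image to grayscale with a plain channel average (a simplification; real imaging software weights the channels differently).

```python
# A 2x2 image: each pixel is a (red, green, blue) tuple, 0..255 per channel.
image = [
    [(255, 0, 0), (0, 255, 0)],
    [(0, 0, 255), (255, 255, 255)],
]

def to_grayscale(img):
    """Replace each pixel's color with a single intensity value."""
    return [[(r + g + b) // 3 for (r, g, b) in row] for row in img]

pixel_count = sum(len(row) for row in image)
gray = to_grayscale(image)
print(pixel_count, gray)
```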

Pixels were first brought from print to screen by Russell A. Kirsch with his digital image scanner. Recent developments in imaging technology have made images with millions of pixels (megapixels) possible.

Pixels have helped in image compression as well, contributing to the convenient transfer of multimedia data. How often have you realized their importance when taking a picture with your smartphone?

Username and Password

Passwords have been used since antiquity to guard secrets and authorize access; riddles were an early counterpart of today's security questions. In the 1960s, MIT's CTSS and IBM's Sabre system were among the first computer systems to require a password.

Fernando Corbató is widely regarded as the father of the modern computer password. These credentials have helped enforce cybersecurity and made computing safer.

Cryptography has since strengthened this field: systems now typically store a salted hash of each password rather than the password itself.
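One common way cryptography is applied to passwords is salted hashing, sketched below with Python's standard `hashlib` and `hmac` modules. The function names and the iteration count are assumptions chosen for this example, not a recommendation for any particular system.

```python
import hashlib
import hmac
import os

ITERATIONS = 200_000  # assumed work factor for this sketch

def hash_password(password, salt=None):
    """Return (salt, digest); a fresh random salt is generated if none is given."""
    if salt is None:
        salt = os.urandom(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, digest

def verify_password(password, salt, expected_digest):
    """Re-hash the attempt with the stored salt and compare in constant time."""
    _, digest = hash_password(password, salt)
    return hmac.compare_digest(digest, expected_digest)

salt, stored = hash_password("correct horse")
print(verify_password("correct horse", salt, stored))
print(verify_password("wrong guess", salt, stored))
```

Only the salt and the digest need to be stored, so a leaked credentials table does not directly reveal anyone's password.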

USB

If you only started using computers in recent years, you may not appreciate USB as much as you should. Imagine the hardship of computer users who wanted to attach peripherals to a computer and were bewildered by a vast number of different ports.

This was the scenario before the dawn of USB. Users had to rely on a range of ports and connectors, such as PS/2, serial and parallel ports, for hardware connectivity.

Universal Serial Bus, or USB, was developed by a collaboration of tech giants including Microsoft and Intel to simplify connectivity and data transfer. It was widely adopted all over the world and is now ubiquitous.

USB 3.0 supports data transfer rates of up to 5 Gbps!

WiFi

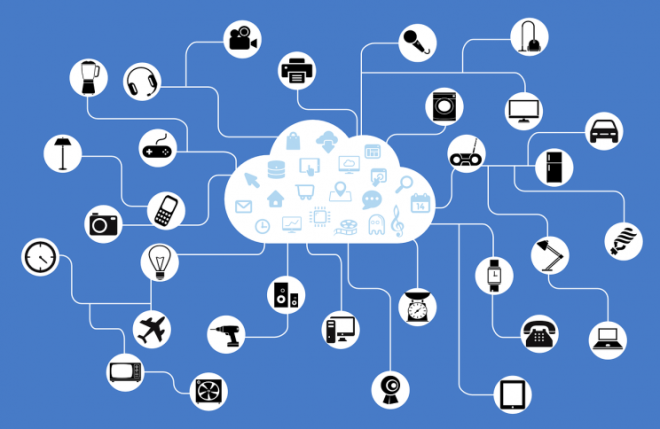

Wireless Local Area Network (WLAN), popularly called WiFi, lets you connect to the Internet without an Ethernet cable. This brilliant computing invention is an amalgamation of many different patents.

The wireless technology behind WiFi emerged from an astrophysics experiment by a team of CSIRO scientists led by Dr John O'Sullivan. Later, the IEEE standardized WLAN under 802.11, and the WiFi Alliance was formed to certify and promote WiFi technologies.

In recent times, WiFi has carried more than 60% of the world's Internet traffic. It has made the Internet accessible to many more people, overcoming geographical and infrastructural hurdles. WiFi has enabled communication between devices and the IoT, and it has contributed to wearable electronics, robotics and other important fields you may not even have thought of.

This vital computing innovation is not just your portal to the net; it is driving a revolution in worldwide connectivity.